Create an Incremental Snowpark Transform

This guide shows you how to build an Incremental Snowpark Transform that processes only new or changed data since the last run, significantly improving performance and resource usage for large datasets.

Snowpark is Snowflake's developer framework that enables processing data directly where it's stored using familiar programming languages like Python.

Note that Snowpark is only available to Ascend Instances running on Snowflake. Check out our Quickstart to set up a Snowflake instance.

Prerequisites

- Ascend Flow

Create a Transform

You can create a Transform in two ways: through the form UI or directly in the Files panel.

- Using the Component Form

- Using the Files Panel

- Double-click the Flow where you want to add your Transform

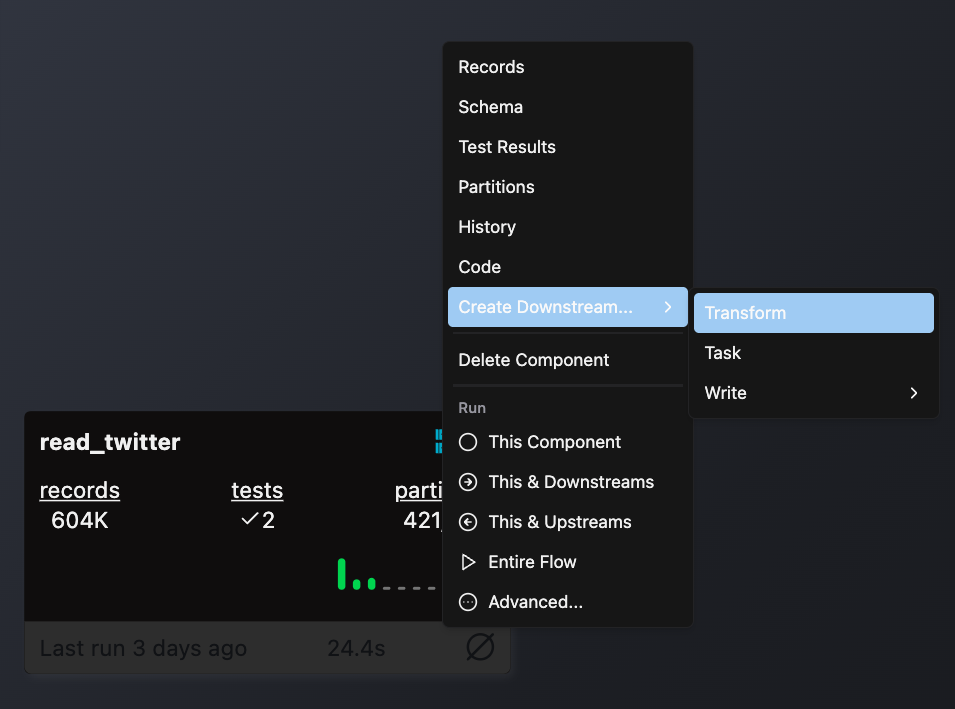

- Right-click on an existing component (typically a Read component or another Transform) that will provide input data

- Select Create Downstream → Transform

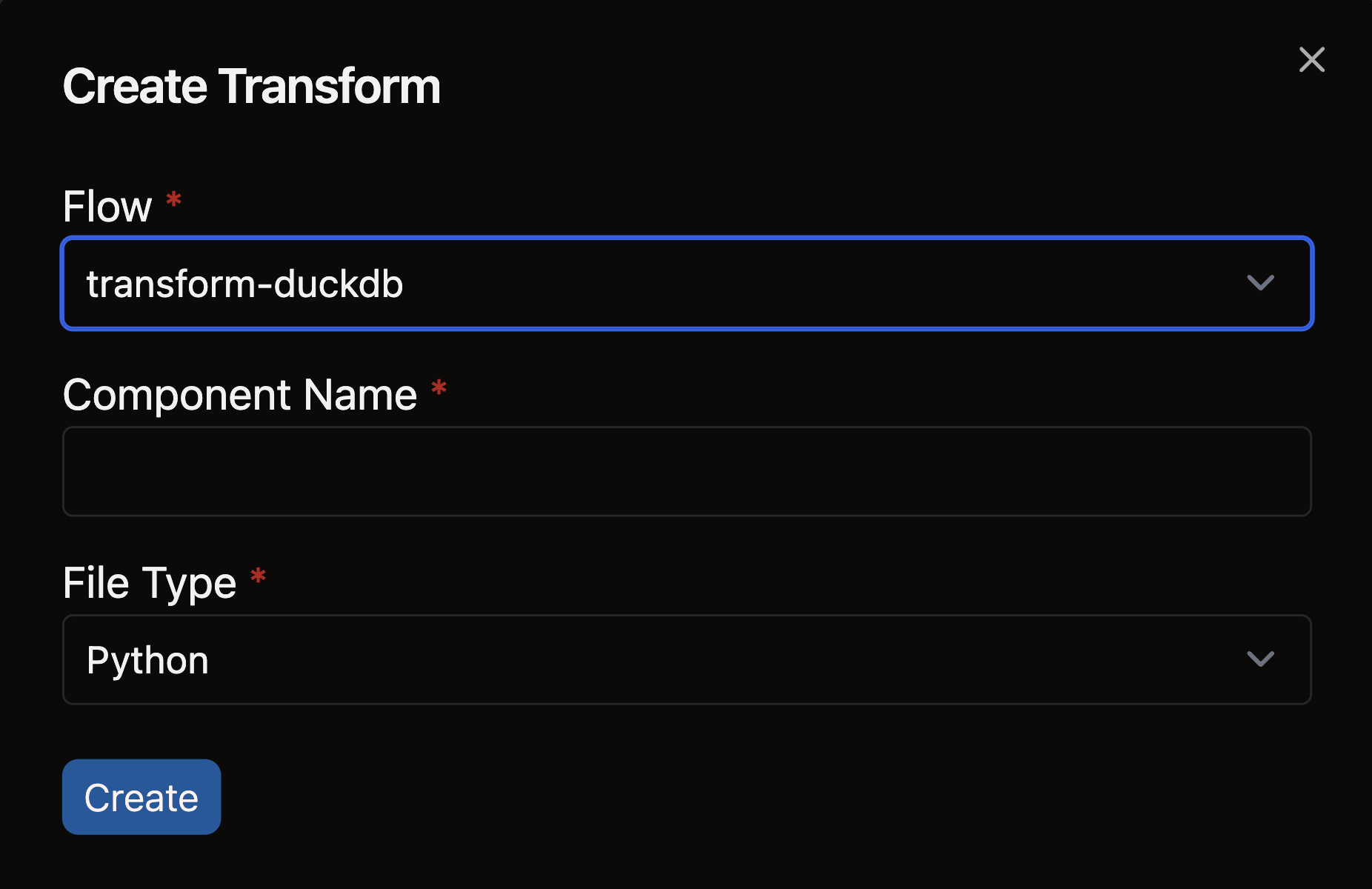

- Complete the form with these details:

- Select your Flow

- Enter a descriptive name for your Transform (e.g.,

sales_aggregation) - Choose the appropriate file type for your Transform logic

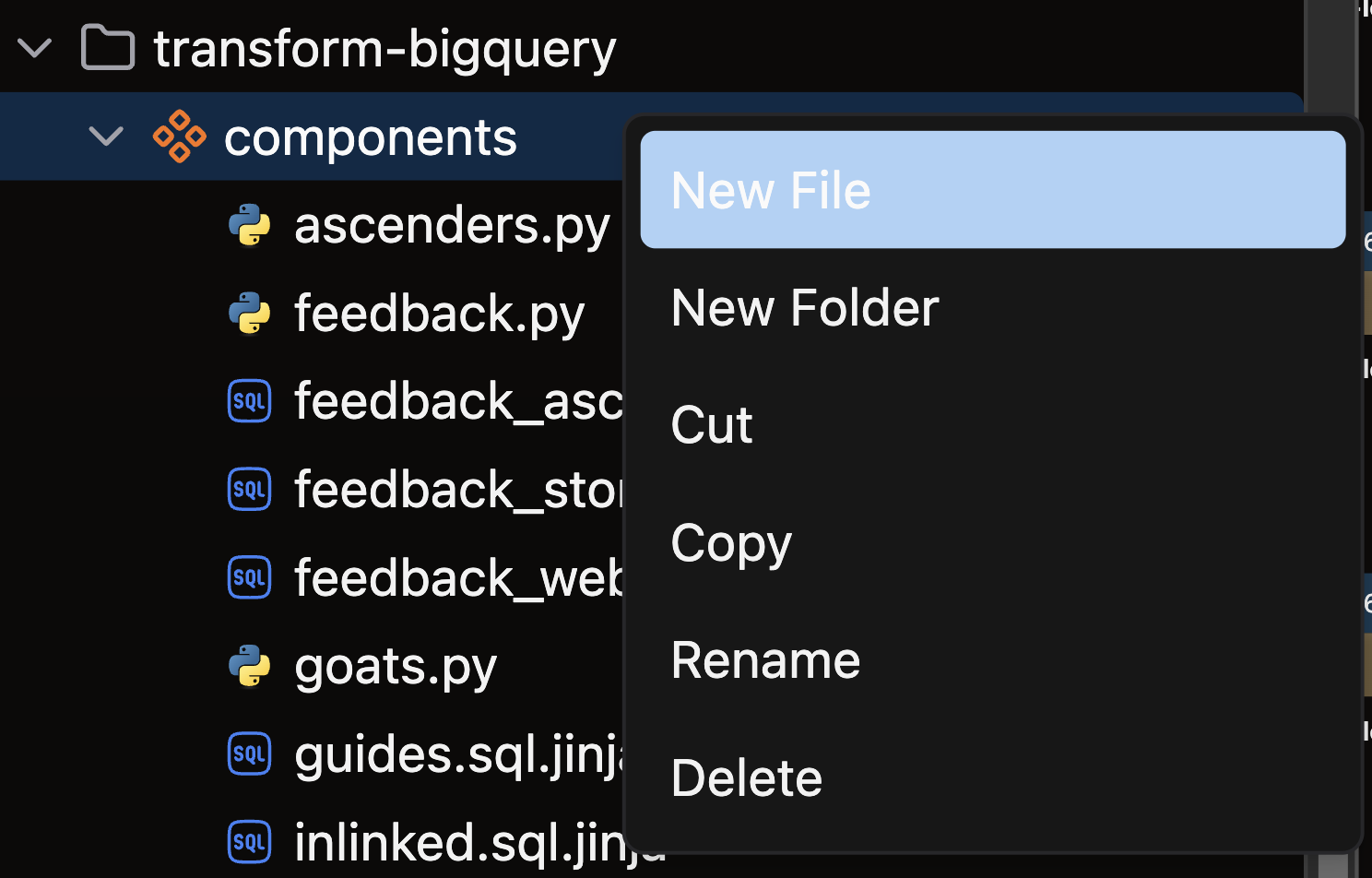

- Open the files panel in the top left corner

- Navigate to and select your desired Flow

- Right-click on the components directory and choose New file

- Name your file with a descriptive name that reflects its purpose (e.g.,

sales_aggregation) - Choose the appropriate file extension based on your Transform type:

.pyfor Python Transforms.sqlfor SQL Transforms

Create your Incremental Snowpark Transform

Follow these steps to create an Incremental Snowpark Transform:

-

Import required packages:

- Ascend resources (

Snowpark,ref) - Snowpark objects (

DataFrame,Session,functions,SnowparkTransformExecutionContext)

- Ascend resources (

-

Apply the

@snowpark()decorator with incremental settings:- Specify your

inputsusing refs - Set

materialized="incremental"to enable incremental processing - Choose an

incremental_strategy: either "append" or "merge"- For merge strategy, specify

unique_keyandmerge_update_columns

- For merge strategy, specify

- Specify your

- Use append when you want to add new records without changing existing ones

- Use merge when you need to both add new records and update existing ones based on a unique key

- Define your transform function:

- Use

context.is_incrementalto check if this is an Incremental run - For incremental runs, query the existing data to determine what's new

- Filter the input data to include only new or changed records

- Process and return the filtered DataFrame

- Use

Examples

- Merge strategy

- Append strategy

Use the merge strategy when you need to both add new records and update existing ones:

from ascend.resources import ref, snowpark

from snowflake.snowpark import DataFrame as SnowparkDataFrame

from snowflake.snowpark import Session as SnowparkSession

@snowpark(

inputs=[ref("incrementing_data")],

materialized="incremental",

incremental_strategy="merge",

unique_key="key",

merge_update_columns=["string", "ts"],

)

def incremental_transform_snowpark(incrementing_data: SnowparkDataFrame, context) -> SnowparkDataFrame:

assert isinstance(context.session, SnowparkSession)

# inputs should be snowpark dataframes

assert isinstance(incrementing_data, SnowparkDataFrame)

"""

Incremental Snowpark transform that merges on 'key' updating 'string' and 'ts'.

Filters the data to only new records based on max ts.

"""

df = incrementing_data

if context.is_incremental:

# get max ts from existing output table

row = context.session.sql(f"SELECT max(ts) FROM {context.component.name}").collect()[0]

max_ts = row[0]

df = df.filter(df["ts"] > max_ts)

return df

Use the append strategy when you only need to add new records:

from ascend.application.node_runner.snowpark import SnowparkTransformExecutionContext

from ascend.resources import ref, snowpark

from snowflake.snowpark import DataFrame as SnowparkDataFrame

from snowflake.snowpark import Session as SnowparkSession

@snowpark(

inputs=[ref("incrementing_data")],

materialized="incremental",

incremental_strategy="append",

)

def incremental_transform_snowpark_append(incrementing_data: SnowparkDataFrame, context: SnowparkTransformExecutionContext):

"""

Incremental Snowpark append transform that appends only new records (ts > max ts)

"""

assert isinstance(context.session, SnowparkSession)

# inputs will be snowpark dataframes

assert isinstance(incrementing_data, SnowparkDataFrame)

df = incrementing_data

if context.is_incremental:

# context.session will be the snowpark session

# get max ts from existing output table

row = context.session.sql(f"SELECT max(ts) FROM {context.component.name}").collect()[0]

max_ts = row[0]

df = df.filter(df["ts"] > max_ts)

return df

Check out our reference guide for complete parameter options, advanced configurations, and additional examples.

🎉 Congratulations! You've successfully created an Incremental Snowpark Transform in Ascend.