Write to AWS S3

This guide shows you how to create an AWS S3 Write Component.

Prerequisites

- S3 Connection with write permissions

- An Ascend Flow with a Component that contains data

Create a new Write Component

Begin from your workspace Super Graph view. You can create your Write Component in either of two ways: with the Component Form or from the Files panel.

Using the Component Form

- Double-click the Flow where you want to create your component

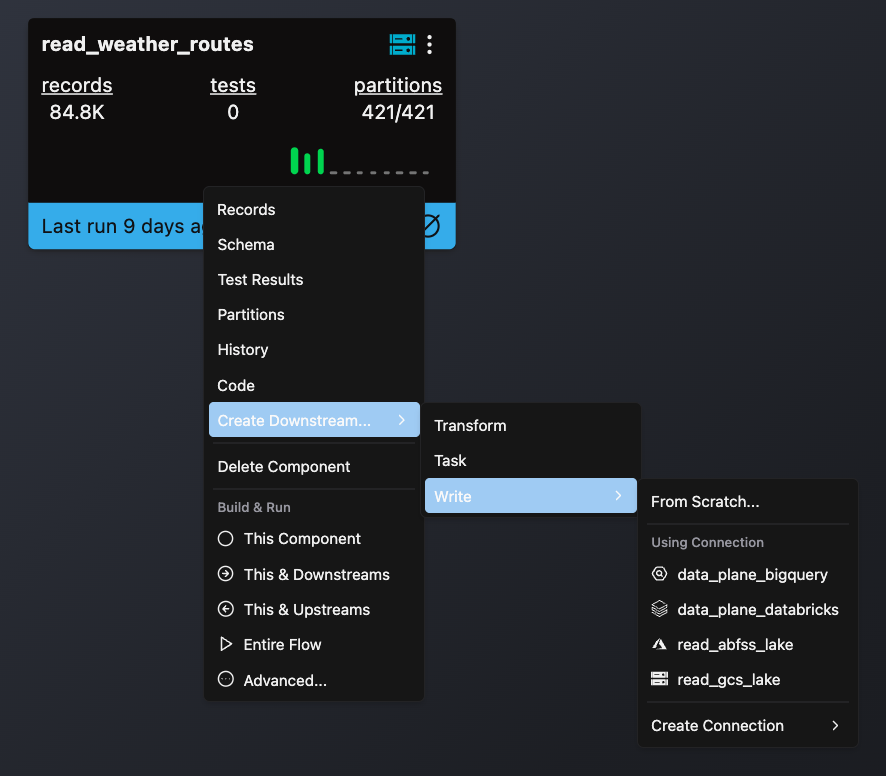

- Right-click on any Component

- Hover over Create Downstream -> Write, and select your target Connection

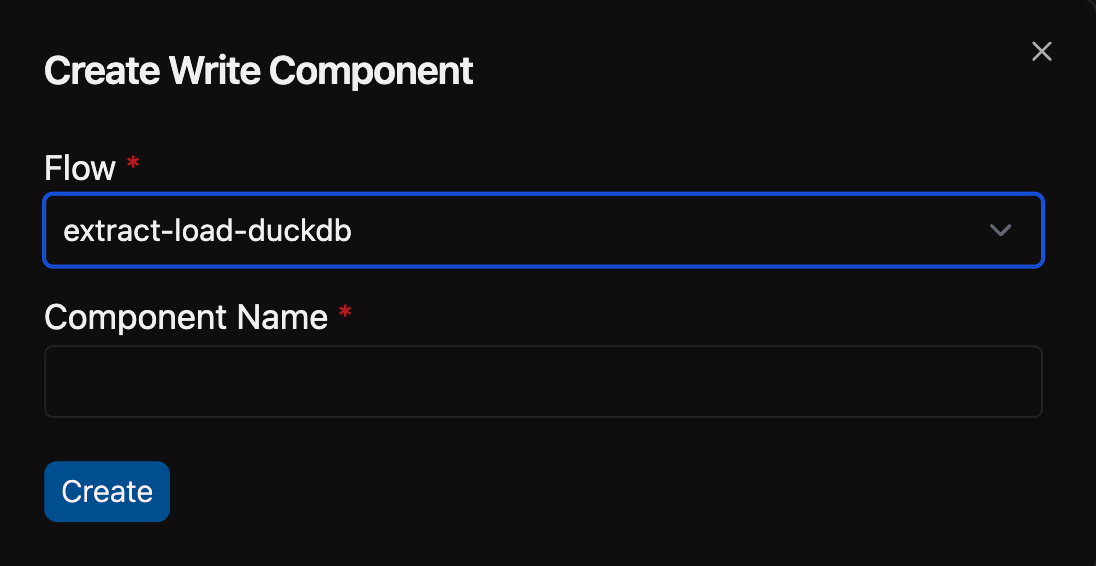

- Complete the form with these details:

  - Select your Flow

  - Enter a descriptive Component Name like `write_s3`

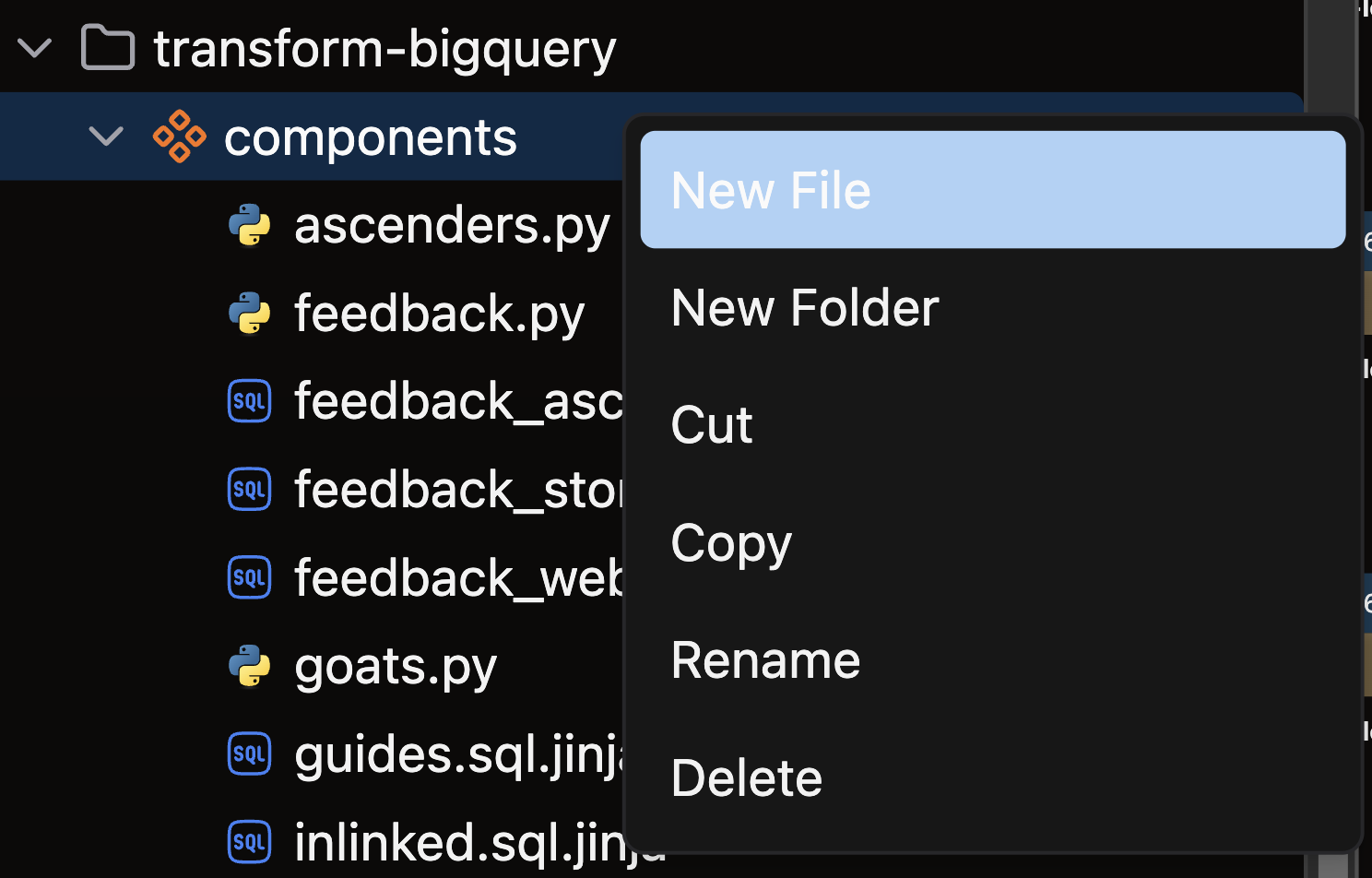

Using the Files Panel

- Open the Files panel in the top left corner

- Navigate to and select your desired Flow

- Right-click on the components directory and choose New file

- Name your file with a descriptive name like `write_s3.yaml` and press Enter

Configure your S3 Write Component

Follow these steps to set up your S3 Write Component:

- Configure your Connection

  - Enter your S3 Connection name in the `connection` field

- Specify a data source

  - Set `input` to the Component that contains your source data

- Define the write target

  - Configure the `s3` write connector options

  - Specify your target `path`, `formatter`, and other required properties

- Select a write strategy

Choose one of these strategies based on your use case:

| Strategy | Description | Best For |
| --- | --- | --- |
| `partitioned` | Updates only modified partitions | Time-series data, regional datasets, date-partitioned data |
| `full` | Replaces entire target during each Flow Run | Reference tables, complete data refreshes |
| `snapshot` | Flexible output: single file or chunked, based on path | Data exports, analytical datasets, flexible output requirements |

For complete details on output format options and when to use each approach, see the write output formats guide.
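
Taken together, the four configuration steps map onto a file with roughly the following shape. This is a minimal sketch assembled only from the field names used in the examples in this guide; the connection name, Component name, Flow name, and path are placeholders, and the layout is an assumption rather than a full reference for the `s3` options.

```yaml
# Sketch only: all values are placeholders, not required names.
component:
  write:
    connection: write_s3      # step 1: the name of your S3 Connection
    input:                    # step 2: the Component that holds the source data
      name: my_component
      flow: my_flow
    strategy: snapshot        # step 4: optional; partitioned is the default
    s3:                       # step 3: s3 write connector options
      path: /some_dir/
      formatter: parquet
```

Leaving out the `strategy` key selects the default partitioned behavior described under Examples.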

Examples

Choose the write strategy that best fits your use case. The examples below cover:

- Partitioned

- Full

- Snapshot (chunked)

- Snapshot (single file)

Partitioned is the default write strategy: if you omit the `strategy` field, the Component uses the Partitioned strategy.

This example shows an S3 Write Component that uses a partitioned write strategy. Partitioned writes now produce chunked output with multiple files per partition.

    component:
      write:
        connection: write_s3
        input:
          name: my_component
          flow: my_flow
        strategy:
          partitioned:
            mode: append
        s3:
          path: /some_parquet_dir
          formatter: parquet

To override the default chunk size of 500K rows, set a custom chunk size with the `part_file_rows` field:

    component:
      write:
        connection: write_s3
        input:
          name: my_component
          flow: my_flow
        s3:
          path: /some_other_dir/
          formatter: json
          part_file_rows: 1000

Output: Multiple files like data_001.parquet, data_002.parquet, etc. in each partition directory.

This example shows an S3 Write Component that uses a full write strategy. Full writes now produce chunked output for better performance with large datasets.

    component:
      write:
        connection: write_s3
        input:
          name: my_component
          flow: my_flow
        strategy:
          full:
            mode: drop_and_recreate
        s3:
          path: /some_other_dir/my_data.parquet
          formatter: parquet

Output: Multiple files like part_001.parquet, part_002.parquet, etc. in the specified directory.

This example shows an S3 Write Component using the snapshot strategy with chunked output and a custom chunk size of 1,000 rows per chunk, set with `part_file_rows`.

The path ends with a trailing slash (/), producing multiple chunk files.

    component:
      write:
        connection: write_s3
        input:
          name: my_component
          flow: my_flow
        strategy: snapshot
        s3:
          path: /snapshot_data/
          formatter: parquet
          part_file_rows: 1000

Output: Multiple files like part_001.parquet, part_002.parquet, etc. in the /snapshot_data/ directory.

This example shows an S3 Write Component using the snapshot strategy with single-file output. The path ends with a specific filename and extension.

    component:
      write:
        connection: write_s3
        input:
          name: my_component
          flow: my_flow
        strategy: snapshot
        s3:
          path: /snapshot_data/my_snapshot.parquet
          formatter: parquet

Output: A single file named my_snapshot.parquet in the /snapshot_data/ directory.

🎉 Congratulations! You successfully created an S3 Write Component in Ascend.